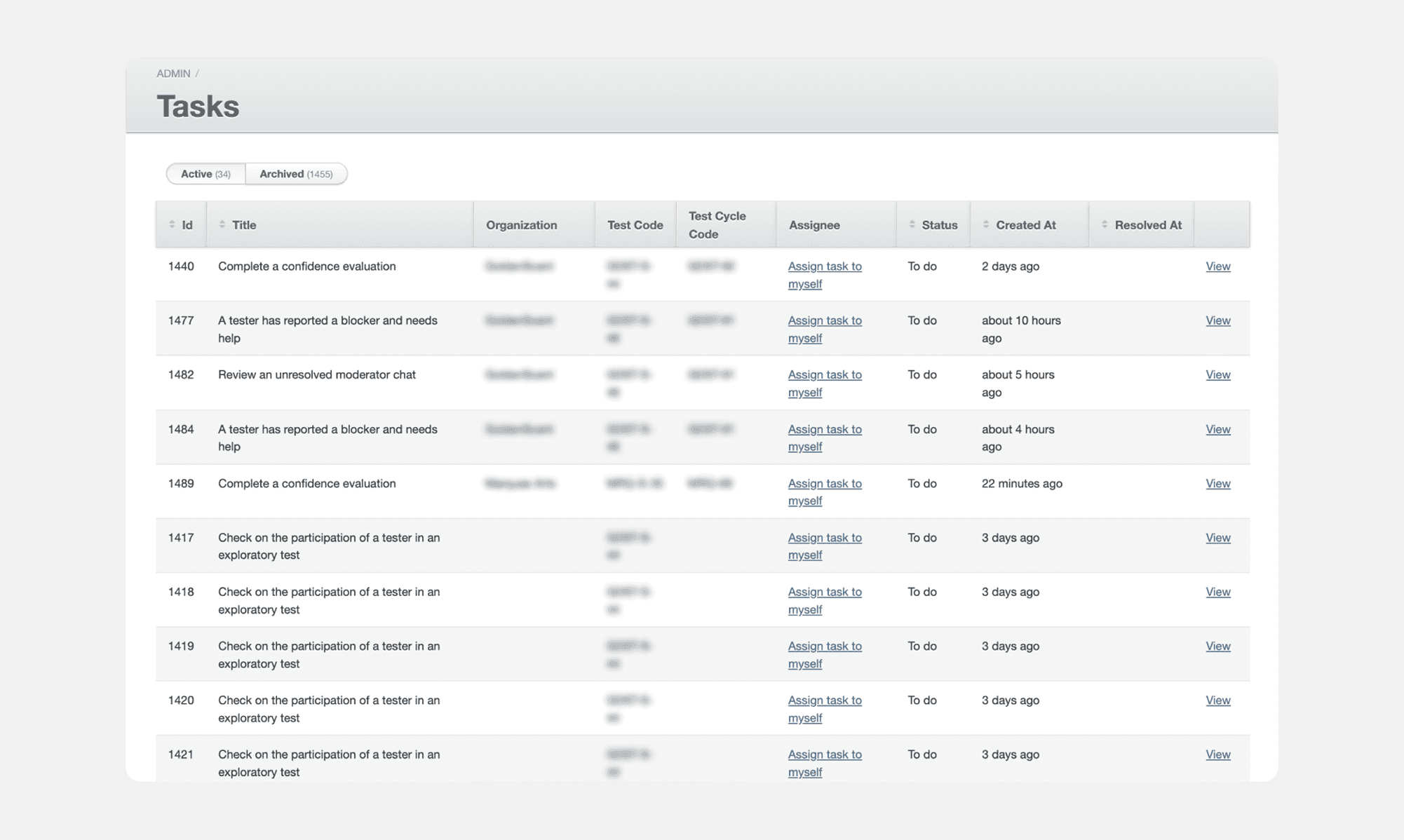

Task system

/ My contribution & overview

Systems design

UX/UI design

User research

Prototyping

Global App Testing is a crowd-testing software QA platform that relied on manual workflows to complete many of the tests requested by customers. This limited the efficiency and scalability of our scale-up business. I designed a system of rules and conditions that automates some tasks and assigns the rest to human test managers.

/ The result

🏆 GAT's test manager to customer ratio improved from 1:2 to 1:6

🏆 The amount of test manager time taken up by manual interventions decreased from 90%+ to <40%

🏆 We generate structured data that highlights opportunities for automations and optimisations

🏆 We operate tests with a system-first approach that will enhance the adoption of future improvements

/ The process

The problem

GAT as a business relied heavily on manual processes and workers to complete the testing activities requested by customers. This manual dependency posed a risk for GAT as it limited the scalability of the business and reduced the profitability of our tests. We needed to improve how GAT runs tests through automations and efficiencies.

User research & discovery

Succeeding in this project required a deep understanding of the existing processes and of what it takes to manage a test to completion. I interviewed numerous stakeholders from Ops to understand exactly what goes into running a test.

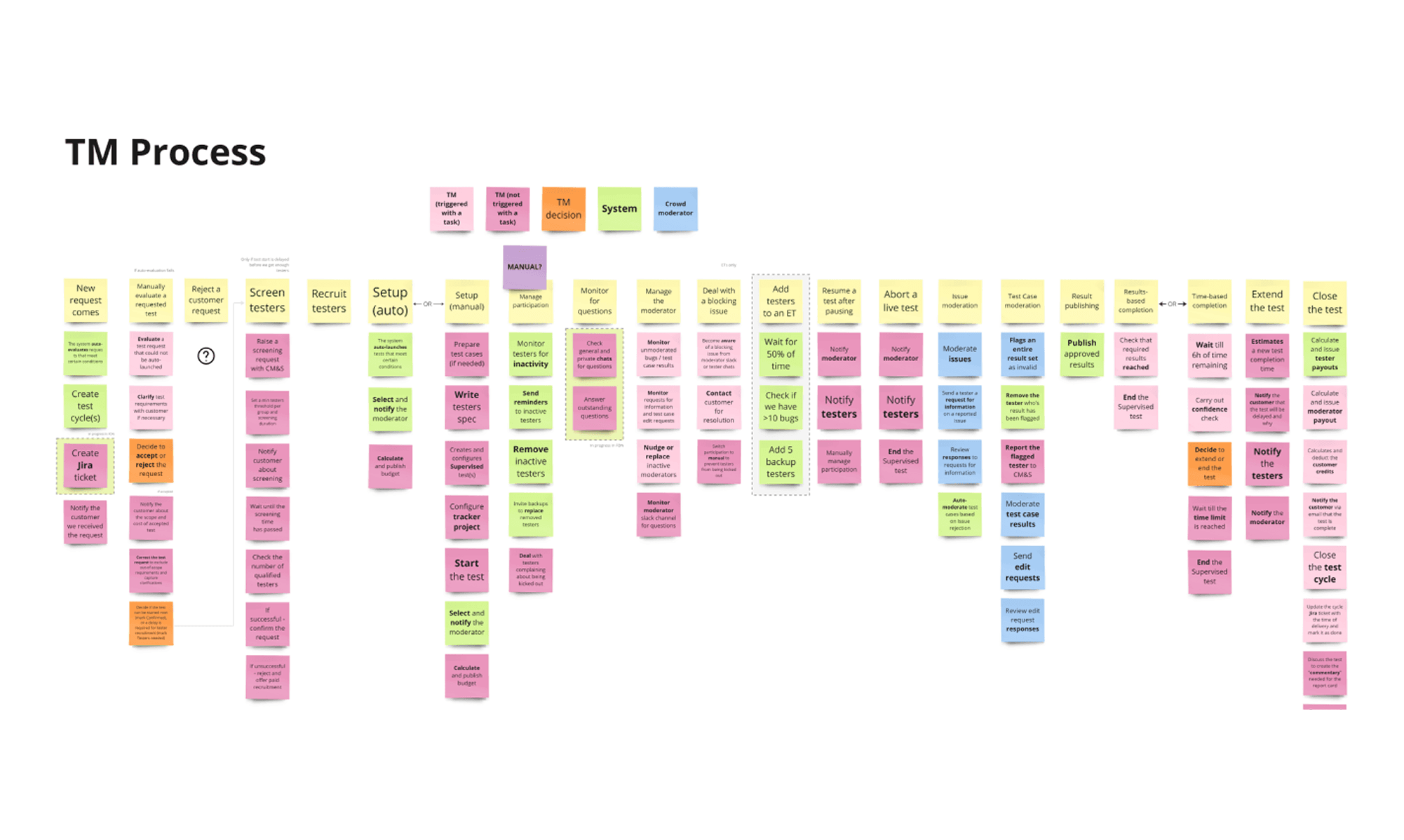

My team and I began constructing a map of the test manager process. We used this map to uncover the tasks and triggers involved in running a test. I then used it to highlight visually where in the process the system has visibility, where it doesn't, and where there are opportunities for automation.

I took a critical view of the process, looking for opportunities to remove tasks or change them, rather than simply re-creating the existing process.

Re-framing the problem

As soon as I began user research, it became clear that a different root-cause problem needed to be addressed. Much of the work being done by test managers was invisible. It was either being done outside of the GAT platform, or wasn't generating structured data that we could use as a trigger for automation and standardisation.

How might we reveal and generate structured data for all tasks completed by test managers?

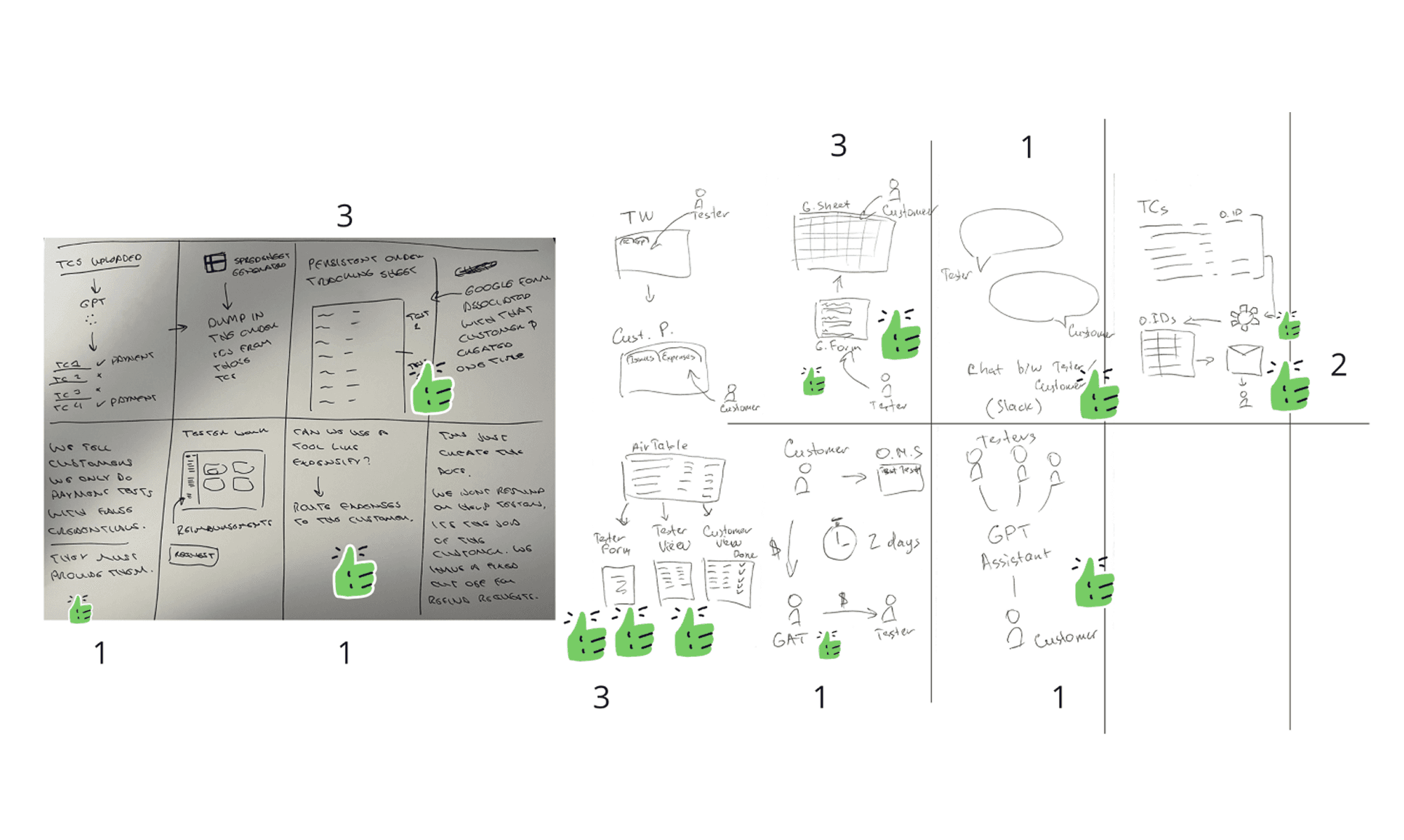

Idea generation & the solution

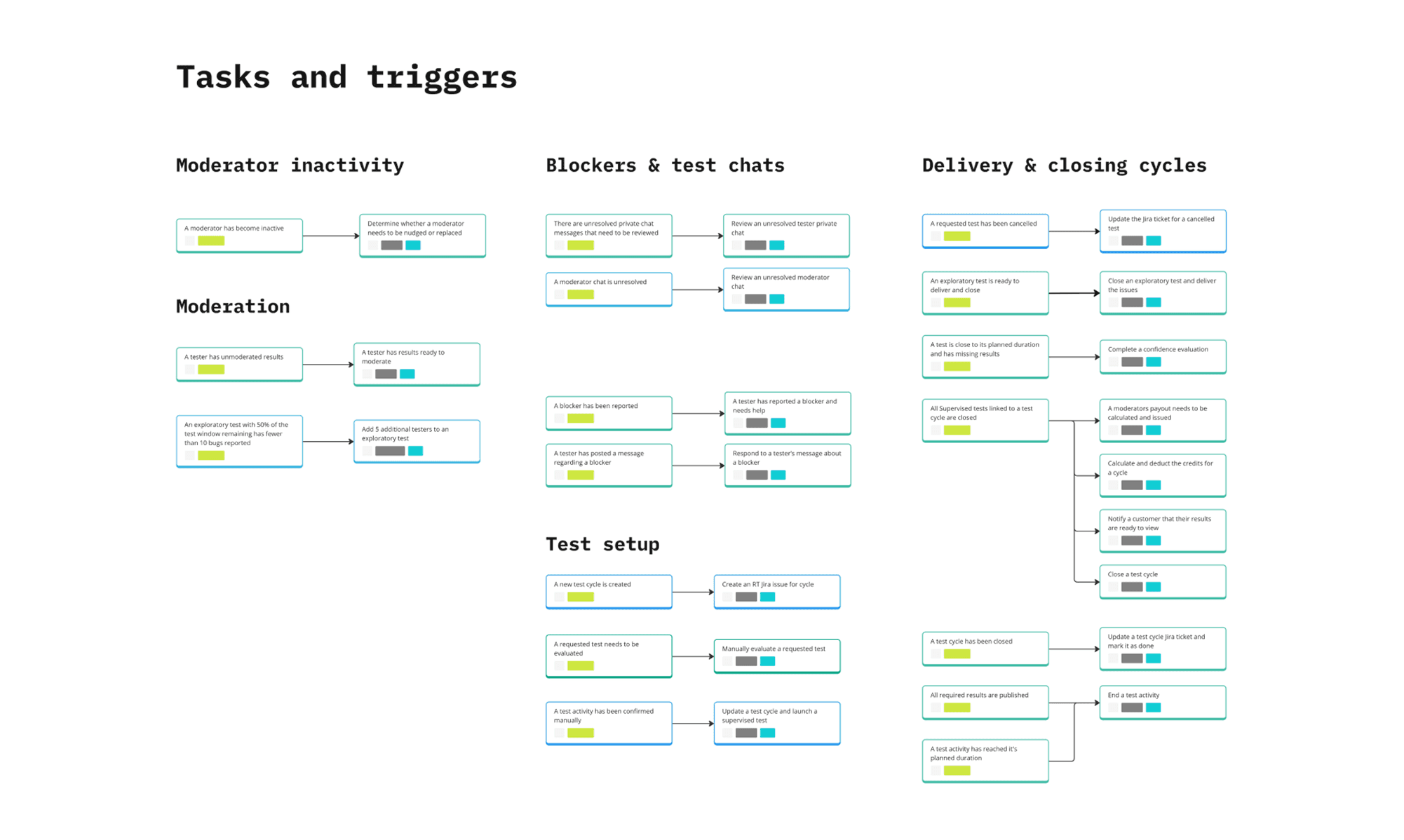

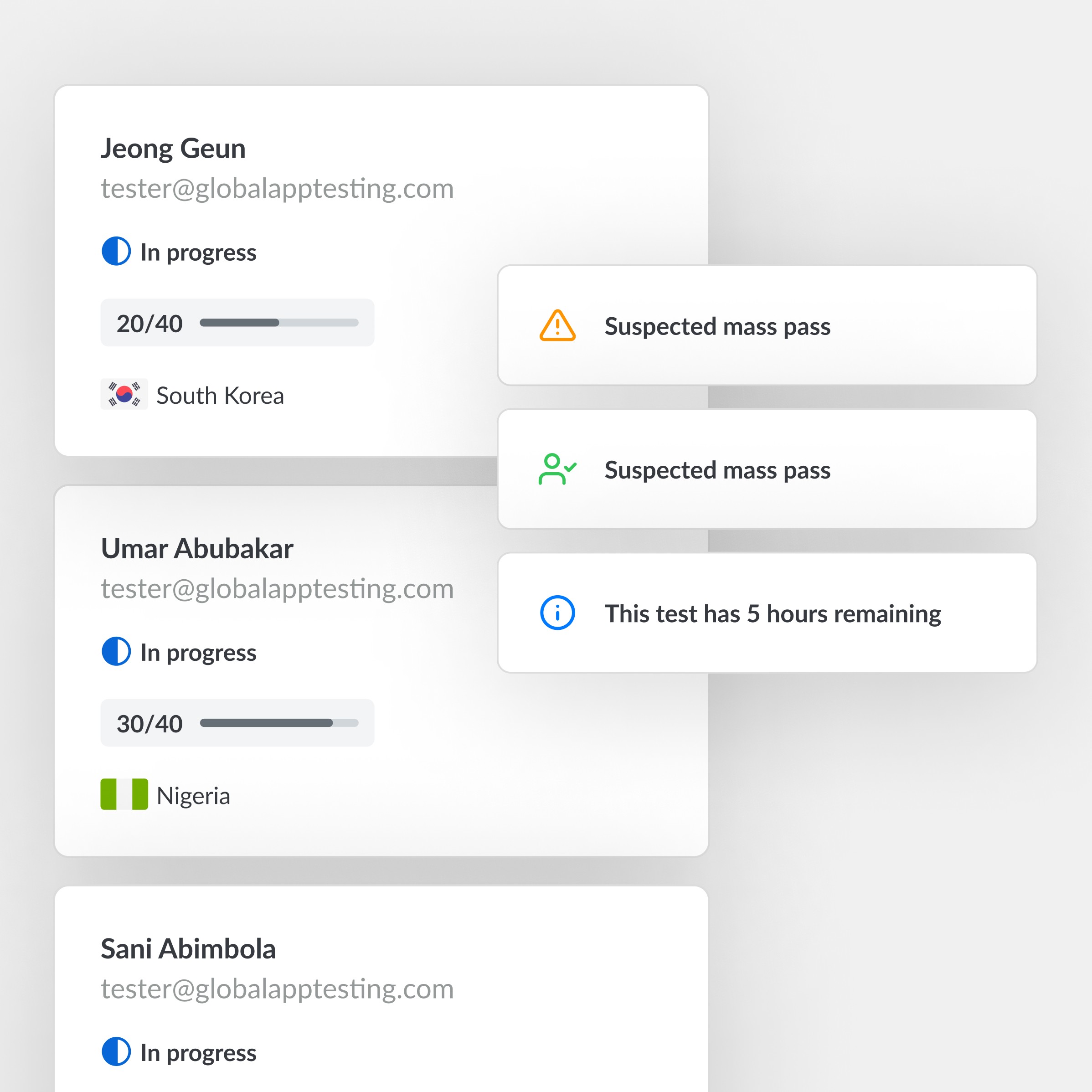

After a number of design workshops, I chose to avoid individual features and instead develop a system of tasks and triggers. Actions that can be automated are automated. For those that can't, we define conditions that, when met, generate a task for a human to complete; for example, conditions indicating that a tester requires support. Most importantly, the system can be wrong and FAIL. These failures can be used to improve the system, instead of humans making invisible allowances for an imperfect design.
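To make the idea of tasks and triggers more concrete, here is a minimal Python sketch. It is illustrative only and is not GAT's implementation: the names `Rule` and `TaskSystem`, the example rules, the field names in the test state, and the 24-hour threshold are all hypothetical. Each rule pairs a condition with an optional automation. A rule without an automation generates a task for a human, and a failing automation is recorded as structured data rather than being quietly worked around.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Rule:
    """A trigger: a condition plus an optional automated action (hypothetical model)."""
    name: str
    condition: Callable[[Dict], bool]
    automation: Optional[Callable[[Dict], None]] = None  # None means a human must act


@dataclass
class TaskSystem:
    rules: List[Rule]
    human_tasks: List[Dict] = field(default_factory=list)
    log: List[Dict] = field(default_factory=list)  # structured record of every decision

    def evaluate(self, test_state: Dict) -> None:
        for rule in self.rules:
            if not rule.condition(test_state):
                continue
            error = None
            if rule.automation is None:
                # No automation exists, so generate a task for a test manager.
                self.human_tasks.append(
                    {"rule": rule.name, "test_id": test_state["test_id"]}
                )
                outcome = "assigned_to_human"
            else:
                try:
                    rule.automation(test_state)
                    outcome = "automated"
                except Exception as exc:
                    # The system is allowed to fail, and the failure stays visible.
                    outcome = "failed"
                    error = str(exc)
            self.log.append(
                {
                    "rule": rule.name,
                    "test_id": test_state["test_id"],
                    "outcome": outcome,
                    "error": error,
                }
            )


def close_test(state: Dict) -> None:
    state["status"] = "closed"


def send_summary(state: Dict) -> None:
    # Deliberately broken automation, to show how a failure is captured.
    raise RuntimeError("summary template missing")


def all_results_in(state: Dict) -> bool:
    return state["results_submitted"] >= state["results_needed"]


system = TaskSystem(
    rules=[
        Rule("close_completed_test", all_results_in, close_test),
        # Assumed threshold: a tester idle for over 24 hours may need support.
        Rule("tester_needs_support", lambda s: s["tester_idle_hours"] > 24),
        Rule("send_summary", all_results_in, send_summary),
    ]
)

system.evaluate(
    {
        "test_id": "T-1",
        "results_submitted": 10,
        "results_needed": 10,
        "tester_idle_hours": 30,
    }
)

for entry in system.log:
    print(entry["rule"], entry["outcome"], entry["error"])
```

In this sketch every decision ends up in `system.log`, whether it was automated, handed to a human, or failed. That log stands in for the structured data that makes invisible work visible and shows where the next automation is worth building.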
